Scale your background job processing using Node.js

BullMQ is a fast and robust background job processing library for Redis™

import { Queue } from "bullmq";

const queue = new Queue("Paint");

// Job options such as `delay` go in the third argument, not in the job data
await queue.add("cars", { color: "blue" }, { delay: 30000 });

import { Worker } from 'bullmq';

// `paintCar` stands for your own processing function
const worker = new Worker('Paint', async job => {
  if (job.name === 'cars') {
    await paintCar(job.data.color);
  }
}, { concurrency: 100 });

Performance

Loaded with functionality

Advanced features

What is BullMQ?

BullMQ is a lightweight, robust, and fast Node.js library for creating background jobs and sending messages using queues. BullMQ is designed to be easy to use, but also powerful and highly configurable. It is backed by Redis, which makes it easy to scale horizontally and process jobs across multiple servers.

BullMQ is a rewrite of the popular Bull library by the same authors, with a new API, a more modern codebase written in TypeScript, and a number of new features and performance improvements.

BullMQ has been successfully used in production to implement video transcoding, image processing, email sending, and many other types of background jobs.

BullMQ is open source under the generous MIT License, without any artificial limitations on the number of workers, concurrency, or the like.

Why Message Queues?

Decouple components

Message queues are a great way to decouple your application components. They are also a great way to scale your application by distributing the load across multiple workers.

Increase reliability

Increase reliability by adding retries and delays to your jobs that communicate with other services or components.
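As a rough illustration of how retries with exponential backoff space out attempts, the sketch below computes the wait before each retry. It assumes the delay doubles on every retry, starting from a base delay. `retryDelays` is a hypothetical helper, not part of BullMQ, and the library's exact formula may differ.

```typescript
// Hypothetical helper (not part of BullMQ): waits before each retry,
// assuming the delay doubles on every retry starting from `baseDelayMs`.
function retryDelays(attempts: number, baseDelayMs: number): number[] {
  const delays: number[] = [];
  // `attempts` counts the first try too, so there are attempts - 1 retries.
  for (let retry = 1; retry < attempts; retry++) {
    delays.push(baseDelayMs * 2 ** (retry - 1));
  }
  return delays;
}

// Three attempts with a 1000 ms base: waits of 1000 ms and 2000 ms.
console.log(retryDelays(3, 1000));
```

Under this assumption, a job configured with 3 attempts and a 1000 ms base delay would be retried after roughly one second and then after roughly two more.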

Offload long-running tasks

Run long-running tasks in the background, and let your users continue using your application while the task is running in your pool of workers.

Schedule jobs

Jobs can be delayed, so that they are run at a specific point in time, or you can run a job at a given interval.
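A delayed job is expressed as a number of milliseconds to wait. One way to target a specific point in time is to compute that number from a date, as in this minimal sketch. `delayUntil` is a hypothetical helper, not part of BullMQ, and the dates are made up for illustration; passing the result as the `delay` job option is shown only in a comment.

```typescript
// Hypothetical helper (not part of BullMQ): milliseconds from `now` until
// `target`, clamped to 0 so that a past date means "run immediately".
function delayUntil(target: Date, now: Date = new Date()): number {
  return Math.max(0, target.getTime() - now.getTime());
}

const now = new Date("2024-01-01T12:00:00Z");
const launch = new Date("2024-01-01T12:00:30Z");
console.log(delayUntil(launch, now)); // 30000

// With a queue at hand, the value could be passed as the `delay` job option:
//   await queue.add("cars", { color: "blue" }, { delay: delayUntil(launch) });
```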

Divide tasks into smaller ones

Breaking a large task into smaller, independent jobs can make it easier to scale your application as well as make it more reliable in case one of the jobs fails.
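The idea can be sketched by splitting a list of work items into chunks and wrapping each chunk as its own job. The `{ name, data }` shape matches what `queue.addBulk` accepts; the helper `toChunkedJobs`, the job name, and the file names are assumptions made for illustration.

```typescript
// Shape of one entry in the array that `queue.addBulk` accepts.
interface BulkJob<T> {
  name: string;
  data: T;
}

// Hypothetical helper (not part of BullMQ): split `items` into chunks of at
// most `size` elements and wrap each chunk as one independent job.
function toChunkedJobs<T>(name: string, items: T[], size: number): BulkJob<T[]>[] {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("size must be a positive integer");
  }
  const jobs: BulkJob<T[]>[] = [];
  for (let i = 0; i < items.length; i += size) {
    jobs.push({ name, data: items.slice(i, i + size) });
  }
  return jobs;
}

const jobs = toChunkedJobs("resize", ["a.png", "b.png", "c.png"], 2);
console.log(jobs.length); // 2

// With a queue at hand, all chunks could be enqueued in one call:
//   await queue.addBulk(jobs);
```

If one chunk fails, only that job needs to be retried rather than the whole task.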

Conform to existing APIs

Limit the rate at which your application calls external APIs by placing a queue in between and enabling a rate limit.
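To make "at most N calls per time window" concrete, here is a small fixed-window limiter driven by explicit timestamps. It is only an illustration of the concept, not how BullMQ implements rate limiting; the class name and its methods are hypothetical.

```typescript
// Illustrative fixed-window limiter (not BullMQ's implementation): allow at
// most `max` calls within each window of `durationMs` milliseconds.
class FixedWindowLimiter {
  private readonly max: number;
  private readonly durationMs: number;
  private windowStart = 0;
  private count = 0;

  constructor(max: number, durationMs: number) {
    this.max = max;
    this.durationMs = durationMs;
  }

  // Returns true if a call at time `nowMs` is allowed, false if it must wait.
  tryAcquire(nowMs: number): boolean {
    if (nowMs - this.windowStart >= this.durationMs) {
      // The previous window has elapsed: start a new one at this call.
      this.windowStart = nowMs;
      this.count = 0;
    }
    if (this.count < this.max) {
      this.count++;
      return true;
    }
    return false;
  }
}

const limiter = new FixedWindowLimiter(2, 1000);
console.log(limiter.tryAcquire(0));    // true
console.log(limiter.tryAcquire(10));   // true
console.log(limiter.tryAcquire(20));   // false, the window is full
console.log(limiter.tryAcquire(1000)); // true, a new window has started
```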

Code Examples

Check out these code snippets to get a feel for how BullMQ works. You can also check out the documentation for more information and examples.

import { QueueEvents } from "bullmq";

const queueEvents = new QueueEvents("Paint");

// QueueEvents listeners receive a single event object as their first argument
queueEvents.on("completed", ({ jobId, returnvalue }) => {
  console.log(`Job ${jobId} completed ${returnvalue}`);
});

queueEvents.on("failed", ({ jobId, failedReason }) => {
  console.log(`Job ${jobId} failed ${failedReason}`);
});

queueEvents.on("progress", ({ jobId, data }) => {
  console.log(`Job ${jobId} progress: ${data}`);
});

import { Queue } from 'bullmq';

const queue = new Queue('Paint');

// Repeat job once every day at 3:15 (am).
// The pattern has six fields and the first one is seconds, so it is 0 rather than *
await queue.add(
  'submarine',
  { color: 'yellow' },
  {
    repeat: {
      pattern: '0 15 3 * * *',
    },
  },
);

import { Worker } from 'bullmq';

const worker = new Worker('Paint', async job => paintCar(job), {
  // Process at most 10 jobs every 1000 ms
  limiter: {
    max: 10,
    duration: 1000,
  },
});

import { Queue } from 'bullmq';

const queue = new Queue('foo');

// Try the job up to 3 times, with an exponential backoff starting at 1000 ms
await queue.add(
  'Paint',
  { color: 'pink' },
  {
    attempts: 3,
    backoff: {
      type: 'exponential',
      delay: 1000,
    },
  },
);

import { FlowProducer } from "bullmq";

const flowProducer = new FlowProducer();

const flow = await flowProducer.add({
  name: "Renovate Car",
  queueName: "renovate",
  children: [
    { name: "paint", data: "ceiling", queueName: "steps" },
    { name: "engine", data: "pistons", queueName: "steps" },
    { name: "wheels", data: "Michelin", queueName: "steps" },
  ],
});

BullMQ Pro

The Next Step in Job Queue Processing

Discover the upgraded BullMQ Pro: A drop-in replacement for the standard BullMQ, delivering exclusive features. Amplify your job processing, scale predictably, and enjoy professional support.

It comes with generous license terms and sensible pricing, without any artificial limitations.

Backed by the same creators, it maintains full compatibility while expanding capabilities with each new release.

Grouping

Assign jobs to groups and set rules such as maximum concurrency per group and/or per-group rate limits.

Batches

Increase efficiency by consuming your jobs in batches, a strategy that minimizes overhead and can boost throughput.

Use Observables

Implement jobs as observables, enabling more streamlined job cancellation and improved job state management.

Professional support

Access professional support directly from the maintainers of BullMQ, ensuring you have expert assistance when you need it.

import { WorkerPro } from '@taskforcesh/bullmq-pro';

// `processFn` and `connection` are assumed to be defined elsewhere
const worker = new WorkerPro('myQueue', processFn, {
  group: {
    limit: {
      // Limit to 100 jobs per second per group
      max: 100,
      duration: 1000,
    },
    // Limit to 4 concurrent jobs per group
    concurrency: 4,
  },
  connection,
});

import { WorkerPro } from "@taskforcesh/bullmq-pro"

import { Observable } from "rxjs"

const processor = async () => {
  return new Observable<number>(subscriber => {
    subscriber.next(1);
    subscriber.next(2);
    subscriber.next(3);
    const timeoutId = setTimeout(() => {
      subscriber.next(4);
      subscriber.complete();
    }, 500);

    // Provide a way of canceling and
    // disposing the timeout resource
    return function unsubscribe() {
      clearTimeout(timeoutId);
    };
  });
};

import { JobPro, WorkerPro } from '@taskforcesh/bullmq-pro';

const worker = new WorkerPro("MyQueue",
  async (job: JobPro) => {
    const batch = job.getBatch();
    for (const batchedJob of batch) {
      await doSomethingWithBatchedJob(batchedJob);
    }
  }, { connection, batches: { size: 10 } });

Pricing

BullMQ is an open source project (MIT Licensed) that is developed and maintained by a small team of developers. If you are using BullMQ in production and would like to support the project, please consider purchasing a license.

BullMQ Pro Standard

Permissive license for all services deployed by a given organization

- Groups

- Batches

- Observables

- Professional Support

BullMQ Pro Embedded

For those looking to embed the library in all products they sell

- Groups

- Batches

- Observables

- Professional Support

- Embeddable in products

$3995/year

or $395/month

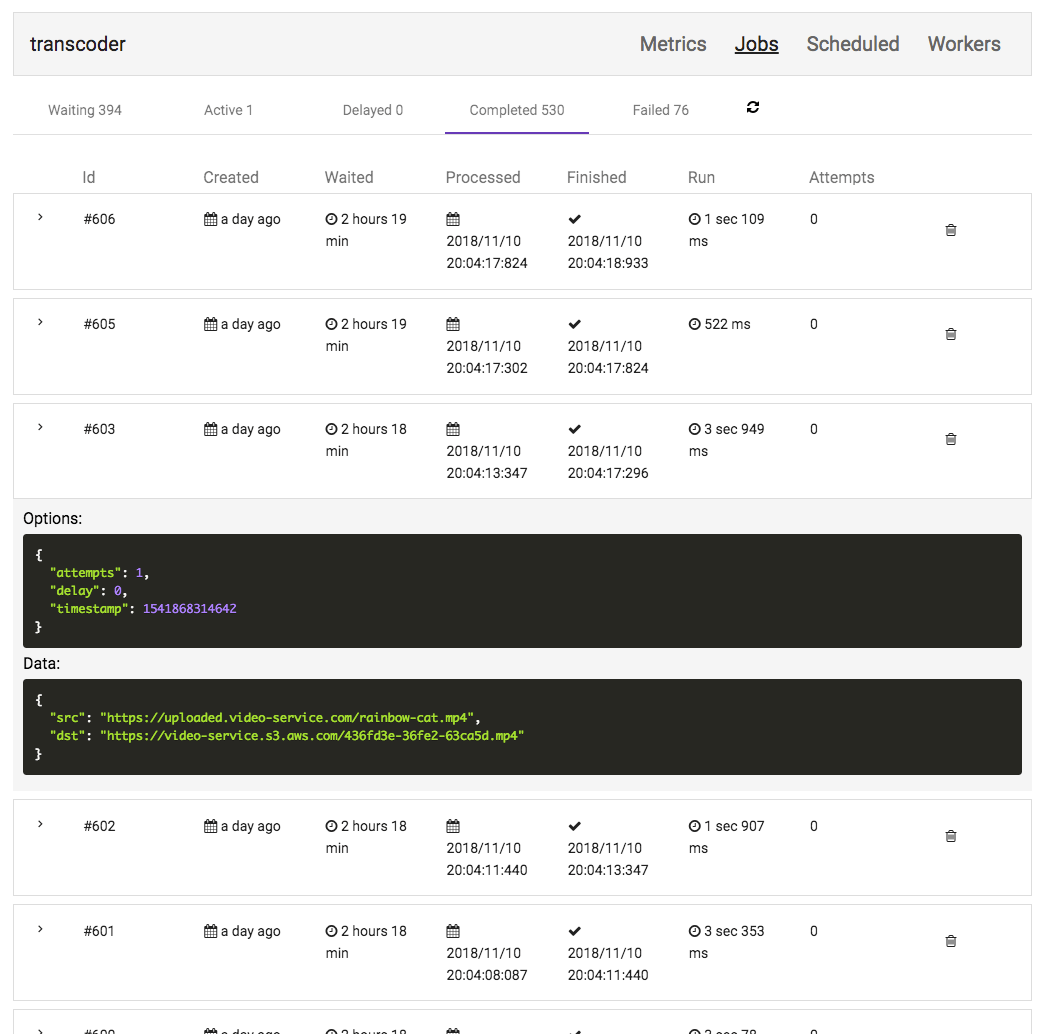

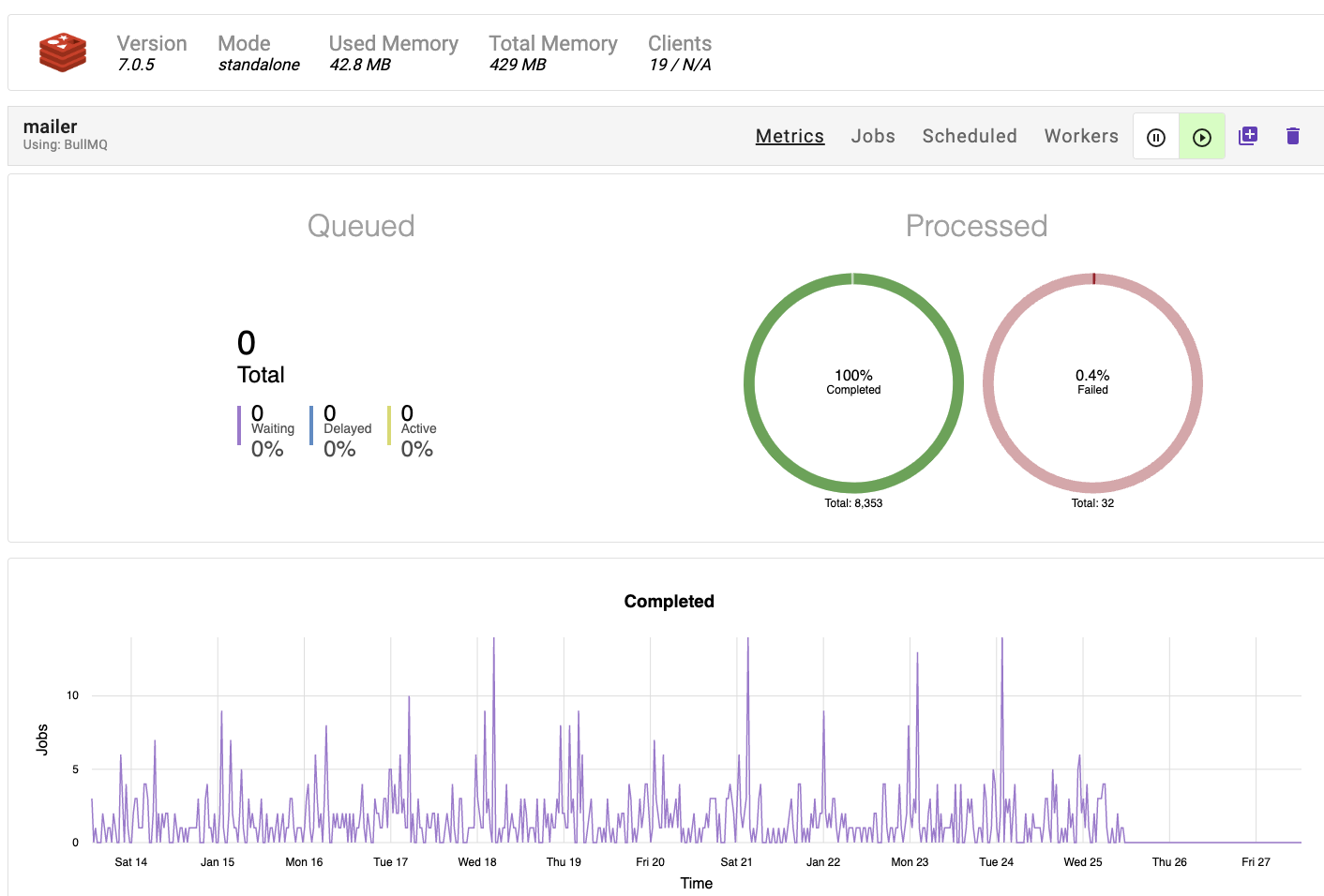

Dashboard

As your queues grow and your system becomes larger, it is important to have a good overview of what is going on. You can use BullMQ with Taskforce.sh to gain better insight into your queues, along with powerful debugging and monitoring tools to manage your jobs.

By subscribing to Taskforce.sh you are supporting the future development of BullMQ.